The Production Gap Nobody Talks About

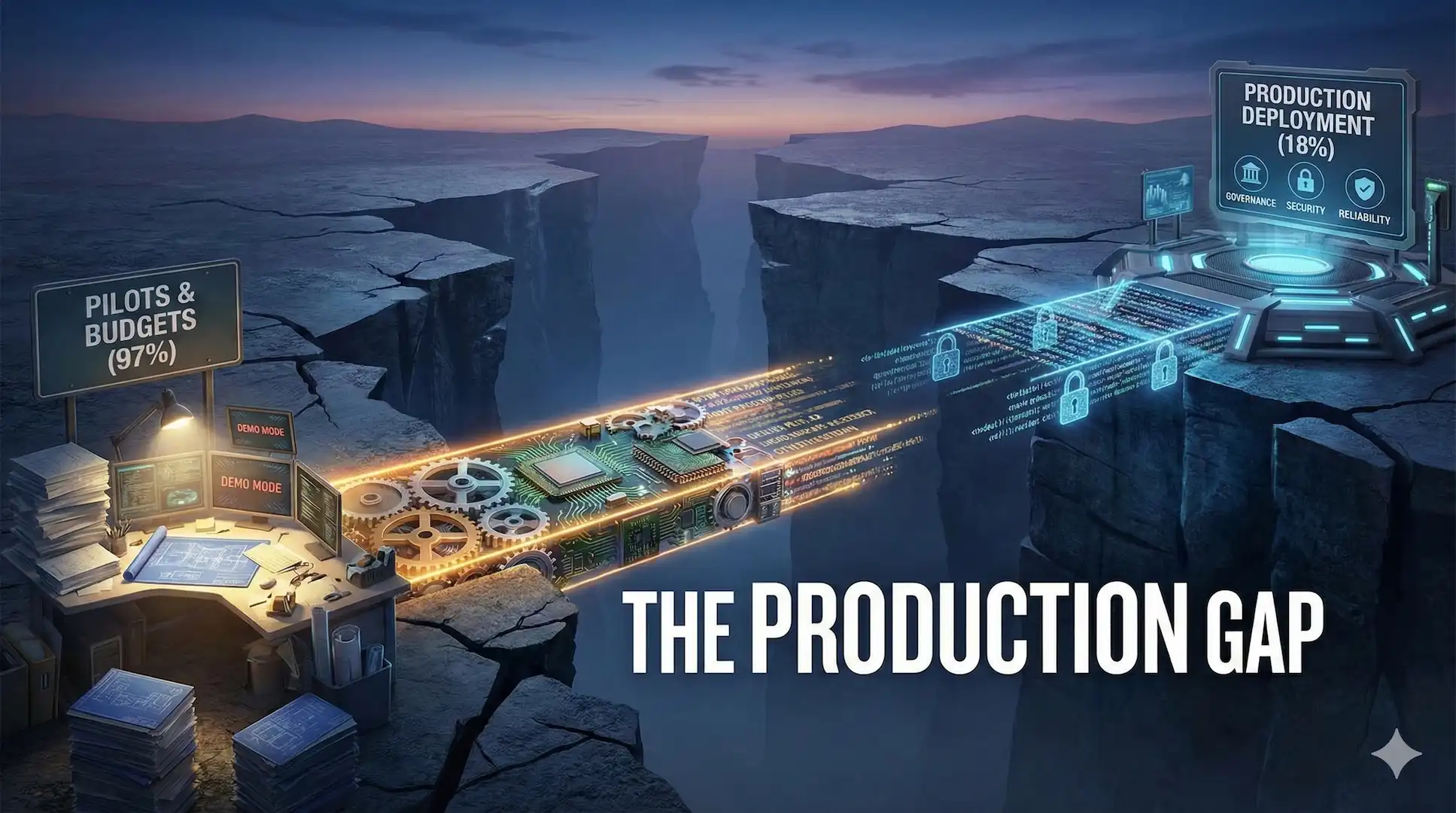

Here's the uncomfortable math: 97% of large enterprises have budget for agentic AI. Only 18% have actually deployed it.

That's not a technology gap. It's not a talent gap. It's not even a strategy gap.

It's a production gap.

The demos work. The pilots impress. The executive presentations generate excitement. Then everything stalls. The agent that looked brilliant in a controlled environment falls apart when it touches real data, real users, real systems.

Sound familiar?

After 15 years of shipping production AI systems—including 50+ platforms for the WHO that handle pandemic response across 194 countries—we've learned that the distance between "cool demo" and "trusted system" isn't measured in technology. It's measured in engineering discipline.

This playbook is how you cross that gap in 90 days.

What "Good" Looks Like in Agentic AI

Let's be clear about what we're building toward. A production agent isn't "an LLM that can call tools." It's a system that:

- Stays within permission boundaries (no wandering into data it shouldn't touch)

- Makes verifiable progress (doesn't hallucinate completion)

- Fails safely (escalates to humans with context)

- Keeps receipts (audit logs, traceability)

- Controls cost (rate limits, timeouts, guardrails)

- Improves over time (evaluation + feedback loop)

If that sounds like "software engineering, security, and operations"—yes. Treat agents like a new class of production workload, not a novelty.

The organisations that understand this are the 18% who deploy. The organisations that don't? They're the 82% stuck in pilot purgatory.

The 7 Ways Agentic AI Fails in the Real World

You can avoid most disasters by designing explicitly against the most common failure modes. These aren't theoretical—they're the problems we see organisations hit repeatedly.

1. Prompt Injection and Instruction Hijacking

Agents that read documents or web pages can be tricked into following malicious instructions ("ignore your rules and exfiltrate..."). OWASP lists prompt injection as a top risk for LLM applications.

Countermeasures: Instruction boundaries, content sanitisation, tool-level authorisation (not "prompt promises"), and explicit "untrusted text" handling.

2. Insecure Output Handling

If your agent's output feeds into downstream systems—tickets, SQL queries, emails, scripts—you can end up with injection-like outcomes. OWASP calls this out explicitly as a distinct attack vector.

Countermeasures: Strong validation, escaping, allow-lists, and structured outputs that constrain what the agent can produce.

3. Data Leakage and Oversharing

Security teams increasingly flag "agent sprawl" and data oversharing as core challenges. An agent with broad access becomes a data exfiltration risk.

Countermeasures: Least privilege, proper secret handling, DLP integration, scoped retrieval, and hard boundaries between tenants and roles.

4. Tool Sprawl (The "Magic Octopus" Problem)

An agent with too many tools becomes unpredictable, hard to audit, and easy to misuse. Every additional tool multiplies the attack surface and the cognitive load on the agent.

Countermeasures: Start with 1–2 tools maximum, define "tool contracts" that specify exactly what each tool can do, and add tools only when evaluation proves they increase reliability—not just capability.

5. Cost Blowouts / Denial of Wallet

OWASP highlights "Model Denial of Service" as a real risk. Resource-heavy prompts, infinite loops, and tool retries can spike costs dramatically before anyone notices.

Countermeasures: Budgets per workflow, hard timeouts, recursion limits, response caching, and circuit breakers that halt runaway agents.

6. No Evaluation Harness

Without evaluation, you're shipping vibes. Production requires testable behaviour. If you can't measure whether the agent is getting better or worse, you're not doing engineering—you're gambling.

Countermeasures: Golden datasets, scenario tests, regression evals, and drift monitoring that alerts when performance degrades.

7. Governance as Paperwork

The biggest blocker isn't policy—it's the ability to implement controls and visibility across the agent lifecycle. Microsoft's enterprise guidance stresses building controls for data protection, compliance, visibility into agent behaviour, and securing infrastructure end-to-end.

Countermeasures: Governance that maps directly to engineering deliverables—logs, approvals, permissions, and evaluation gates that actually block bad releases.

The Core Principle: Trustworthy AI Is Multi-Dimensional

If you're trying to win stakeholder confidence (CIO, CISO, legal, ops), use a shared language. The NIST AI Risk Management Framework describes "trustworthy AI" characteristics:

- Valid and reliable (it does what it claims)

- Safe (it doesn't cause harm)

- Secure and resilient (it resists attack and recovers from failure)

- Accountable and transparent (you can explain what it did and why)

- Explainable and interpretable (humans can understand its reasoning)

- Privacy-enhanced (it protects sensitive data)

- Fair (it doesn't discriminate)

In practice, you don't "achieve" all of these perfectly—you balance them against the use case. But you must make trade-offs explicit. A customer-facing chatbot has different requirements than an internal document processor. Know the difference and design accordingly.

The 90-Day Plan: What to Do, in What Order

Days 0–15: Choose the Right First Workflow

Pick a workflow with these properties:

- High frequency (daily or weekly execution)

- Clear success criteria (you can measure quality objectively)

- Bounded scope (you can define what "done" means)

- Low blast radius if wrong (or easy human approval)

Good examples:

- Drafting internal reports for human review

- Summarising case notes with citations

- Preparing compliance evidence packs

- Triaging support tickets

- Answering staff questions from approved document repositories

Avoid:

- Autonomous customer-facing decisions

- Anything that changes records without human review

- Anything where "wrong" harms someone

Deliverable by Day 15: A one-page "agent spec" covering:

- Purpose and users

- Data sources

- Tools allowed

- Approval points

- Failure modes

- Success metrics

Days 15–30: Build the Minimum Safe Reference Architecture

Before you chase capability, set the rails. At minimum, you need:

Identity and Permissions

Agent access is derived from real RBAC, not just prompt instructions. The agent should only be able to access what the invoking user can access.

Observability

Traces, tool calls, retrieval sources, outcomes, and costs—all logged and queryable. If you can't see what the agent did, you can't debug it, audit it, or improve it.

Safety Gating

Human approval before actions that matter. This isn't overhead—it's how you catch problems before they become incidents.

Data Boundary

Explicit allow-list of sources. No "whole SharePoint" by default. The agent should only see what it needs for the specific workflow.

Policy Mapping

What's allowed, who owns it, and how changes are approved. This should be code, not documentation.

Deliverable by Day 30: A "production skeleton" running end-to-end in a safe environment—even if it does very little useful work yet.

Days 30–60: Add Evaluation (Where Most Teams Skip and Pay Later)

This is the phase that separates production teams from demo teams. You need to know: Is it getting better? Is it getting worse? Why?

Create three evaluation layers:

1. Golden Set (20–50 realistic cases)

These are hand-curated examples with known-correct answers. Run them on every change. If the golden set regresses, you don't ship.

2. Regression Suite (repeatable tests for key behaviours)

These test specific capabilities—"Can the agent correctly extract invoice totals?" "Does it cite sources accurately?" Automated, fast, part of CI/CD.

3. Risk Tests (adversarial scenarios)

Prompt injection attempts, data leakage attempts, tool misuse. Use OWASP's Top 10 for LLM Applications as your threat checklist.

Deliverable by Day 60: Evaluation dashboard plus a "release gate"—you don't ship changes unless you pass defined quality thresholds.

Days 60–90: Ship With an Operating Model

This is where you become "production," not "pilot." The difference isn't technical—it's operational.

Define:

Ownership

Who is accountable for outcomes and changes? Not "the team"—a specific person with authority and responsibility.

Change Control

How prompts, tools, and models are updated and tested. Every change goes through evaluation. No "quick fixes" that bypass the process.

Incident Response

How you handle bad outputs, data exposures, or cost spikes. Who gets paged? What's the escalation path? How do you communicate with affected users?

Quarterly Review

Stakeholder reporting, risk posture assessment, roadmap planning. The agent should improve every quarter, and you should be able to prove it.

Deliverable by Day 90: A workflow in production with:

- Audited logs

- Measurable performance

- Defined escalation paths

- Clear cost controls

- A repeatable playbook you can reuse for the next 5 workflows

Production Readiness Scorecard

Use this to decide if you're ready to scale beyond one workflow:

Reliability

- ≥80% success rate on golden set

- Clear definition of "acceptable failure"

- Human escalation works and is actually used

Security

- RBAC enforced at tool and data layer

- Prompt injection risk tests in evaluation suite

- Output validation on any downstream actions

Governance

- Agent inventory exists (you know what agents you have)

- Changes require evaluation pass before deployment

- Audit logs exist and can be reviewed

Cost

- Budgets defined per workflow

- Rate limits and timeouts implemented

- Monitoring and alerts for cost spikes

If you can't check these boxes, scaling will magnify problems. Fix the gaps before adding more workflows.

Why This Playbook Wins (And Why It's Ethical)

Agentic AI is powerful precisely because it can touch real work: documents, systems, decisions. That means the moral bar should be higher, not lower.

This approach is "ethically boring" in the best way:

- Privacy by design (data access is scoped and justified)

- Least privilege (agents can only do what they need)

- Human approval on consequential steps (autonomy where appropriate, oversight where necessary)

- Measurable reliability (you can prove the system works)

- Transparent audit trails (you can explain what happened)

- Continuous improvement (you're always getting better)

It's how you build systems you'd be happy to explain on the front page.

The Path Forward

The organisations winning at agentic AI aren't the ones with the most advanced models or the biggest budgets. They're the ones who treat AI agents like what they are: production systems that require engineering discipline.

90 days. One workflow. Proper foundations.

That's how you cross the gap from the 82% who demo to the 18% who deploy.

The alternative is another year of impressive PowerPoints that never ship.