Key Takeaways:

Anticipatory Service: Predictive engines can foresee customer needs by analysing historical behaviour, allowing businesses to stock or suggest products proactively.

Advanced AI Techniques: Tools like LDA, SVD, and Factorisation Machines are critical for breaking down complex, sparse datasets and transforming them into actionable insights.

Personalization at Scale: By clustering similar items and users, these recommendation systems deliver tailored suggestions that enhance user experience and drive sales.

Competitive Edge: While major companies leverage these technologies to dominate their markets, smaller players can also harness them to innovate and differentiate themselves.

Evolving Business Models: The integration of AI in predictive recommendation not only refines long tail sales models but also sets the stage for a future where personalized service becomes the norm.

○

If I could predict what you wanted before you walked into my shop, I could make sure I had it in stock when you arrived and sell it to you.

Imagine as a skateboarding geek in a world where geeks are back in fashion, you scoot into the comic store and the nerd behind the counter offers you a 1995 Spiderman 59. Not only does the owner know you well enough to know what is missing from your collection, they have actually kept it behind the counter just for you!!!

This kind of predictive recommendation is the Holy Grail of many long tail sales models. A predictive engine that can figure out what you will buy and have it ready and waiting for you, when you step through the door / open your browser.

AI predictive suggestion engines are can be irritating when they get it wrong, they may even seem quite trivial, but a group of companies are silently becoming extremely wealthy and running rings around the competition by doing this well. So what tools are available and how are they doing it?

History as a predictor of the future -

For the last three days you have lived off delivery pizza, including cold leftovers for breakfast. Now our predictive analytics engine steps up suggests what you should eat tonight. By looking at your history, our engines knows exactly what you like, and oh dear it looks like pizza for you again tonight....

Classification and similarity clustering (Item Hierarchy) - Mmm ok lets agree that we do like Pizza, but not every night and try that again. By grouping / clustering similar types of food together our predictive recommendation engine knows that you are a Garlic Bread and Cheese person. We seem to be stuck in a rut, surely we can do better ...

Product and trending popularity - seems that you are looking for better suggestions, so rather than studying you, we can look at everyone else to see what is popular right now. Curry is very popular tonight so our engine is going to suggest a nice Tikka Masala.

Profiling you - hopefully we had a win with the curry, and hey if we did not get that right then we just learned that maybe you do not like spicy food. When it comes to profiling, all information can be useful. So we are going to start collecting everything we can, likes, dislikes, sex, age, where you live and as much of your browsing history as we can get. We will use this to categorise you with similar people and then look at what is popular and trending in your profile group.

Putting it all together with AI - So we are going to look at your history, your profile, how much you spend and cluster you with similar people. We are also going to cluster our food together, and look at what is trending. We put all that together, but it is starting to get complicated, not least because all this data changes on a daily basis. We need supervised learning that can keep up with the the growing flow of information.

At this point we do need to get technical. We have all of this information and maybe if we sit teams of people to sift through it we could really make some smart suggestions, but if we want to do it without teams of analysts we need AI. Let's look below at what tools are on offer.

Meet LDA and SVD

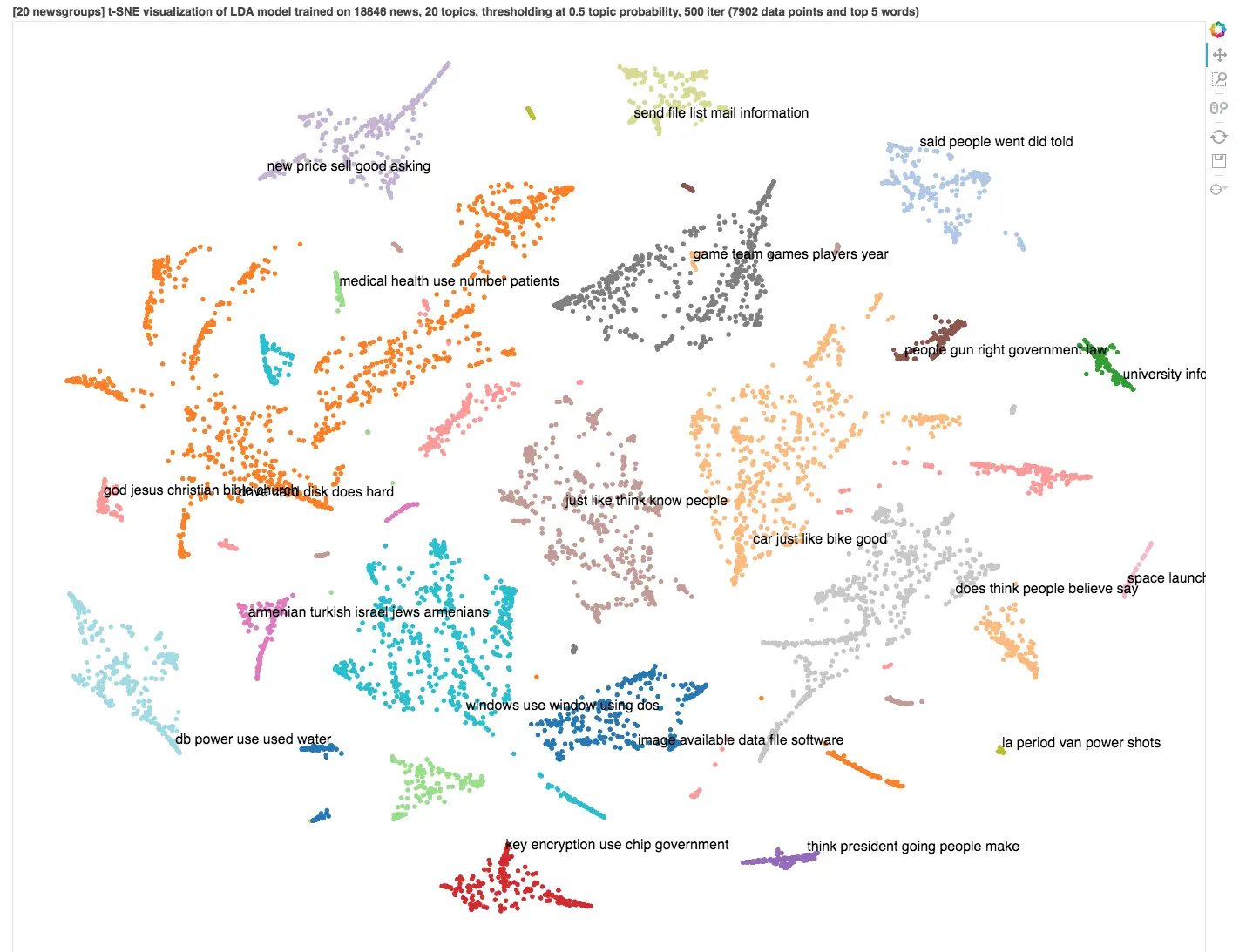

LDA short for Latent Dirichlet Association

LDA is easiest to understand with a practical example analysing the following sentences

- I ate an orange and peach smoothie for lunch

- I like to eat cheese and chocolate.

- Puppies and lambs are cute.

- My friend bought a puppy today.

- Look at this pretty chinchilla eating a piece of pie.

LDA is a way of identifying the topics in these sentences.

Sentences 1 and 2 are about food (Topic A)

Sentences 3 and 4 are about animals (Topic B)

Sentence 5 is about animals and food (Topic A and Topic B)

So LDA is a way of us breaking down free text into manageable taxonomies automatically without having to pre-create the taxonomy first.

LDA actually goes a step further, and as well as creating the categories it counts the words and assigns weights / percents to the categorisation. eg sentence 5 is 66% about food since there are two food related words to the one animal word.

SVD short for singular-value decomposition

Once we have mapped our information into vector space we end up with some pretty complex and large n-dimensional matrices which are mostly composed of zeros. Without going into the heavy maths SVD allows us to reduce the large multidimensional matrices into a simpler representations, which are still suitable for processing through a neural network

Factorisation Machines

Factorisation Machines are on the cutting edge when it comes to recommendation machines. They are currently the most effective and powerful engines we have at predicting what to suggest to you based on all of the above. When it comes to real life, these are the algorithms that are most successful.

In essence a Factorisation Machine is a supervised learning algorithm that can be used for for both classification and regression tasks.

Factorization machines were introduced by Steffen Rendle in 2010. The idea is to model interactions between features, ie taking all the things we know you like or dislike and then filling in the blanks where we do not have data.

The problem with pre FM models is that we have plenty of metadata, but we are not able to utilise that data effectively, with FM no training examples are required in the model parameters, making the models much more compact. In essence pre FM the data is still too big. The problem is that we are battling large scale sparse data sets i.e. most of our data is packed with zeros. The more categories we create the more zeros we have. Factorisation reduces the tensors by an order and prevents them from scaling out of control as the range of inputs increase.

Summary

Predictive engines are increasingly giving the giants like Netfllix and Amazon a huge edge, but these techniques are available to smaller companies too, and their applications range far and wide. When it comes to making the most of these technologies its starts with first knowing what is the possible, and hopefully this introduction will give you some ideas.